Complete Visual Testing and Continuous Integration with Seltest

26 Jun 2015

In this post we will introduce a class of frontend tests that cover more surface area with less effort, and explain how to run them in continuous integration using SauceLabs. Our Cycledash project is over 75% covered by unit tests, but this other class of test covers functionality and styling that unit tests may miss. We call these visual tests.

Visual Tests

Visual tests take place in the browser, with the test client carrying out actions that a user might perform. This is similar to typical Selenium tests, except our focus isn’t on asserting that certain elements exist or that an action can be carried out. Instead, we take a screenshot at the end of each test, and compare it to the golden master screenshot. The test fails if the actual screenshot differs from this expected image even by a single pixel.
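The single-pixel rule can be made concrete with a minimal sketch of a pixel-exact comparison. This is not Seltest's implementation. It assumes both screenshots have already been decoded into row-major lists of RGBA tuples (for example with Pillow's `Image.open(path).convert('RGBA').getdata()`), and the `Screenshot`, `diff_bbox` and `screenshots_match` names are hypothetical.

```python
from typing import NamedTuple, Optional, Sequence, Tuple


class Screenshot(NamedTuple):
    width: int
    height: int
    pixels: Sequence[Tuple[int, int, int, int]]  # row-major RGBA, width*height entries


def diff_bbox(expected: Screenshot, actual: Screenshot) -> Optional[Tuple[int, int, int, int]]:
    """Bounding box (left, top, right, bottom; right/bottom exclusive) of all
    differing pixels, or None if the two screenshots are identical."""
    if (expected.width, expected.height) != (actual.width, actual.height):
        # A size change counts as a difference across the larger canvas.
        return (0, 0, max(expected.width, actual.width), max(expected.height, actual.height))
    left, top, right, bottom = expected.width, expected.height, -1, -1
    for i, (a, b) in enumerate(zip(expected.pixels, actual.pixels)):
        if a != b:
            x, y = i % expected.width, i // expected.width
            left, top = min(left, x), min(top, y)
            right, bottom = max(right, x), max(bottom, y)
    if right < 0:
        return None  # no pixel differed
    return (left, top, right + 1, bottom + 1)


def screenshots_match(expected: Screenshot, actual: Screenshot) -> bool:
    """True only if every pixel is identical: one changed pixel fails the test."""
    return diff_bbox(expected, actual) is None
```

Returning a bounding box rather than a bare boolean mirrors what a developer wants when a test fails: where on the page the change happened.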

The advantage of visual tests is that they let you capture a lot of the UI and underlying functionality of the application without explicitly enumerating all the properties you would like to verify. In particular, they catch CSS and HTML regressions that no reasonable set of unit tests would catch. For example, changing the border of a div could reflow an entire page’s layout; a simple unit test wouldn’t catch this change, but any visual test of an affected page trivially would.

Our visual tests are written using Seltest, a simple visual testing framework written in Python and based on Selenium. Tests specify the URLs we would like to hit and the interactions to perform on those pages, such as filling in a form or clicking a button. Each step of the test automatically takes a screenshot of the current viewport and compares it against a “golden master” screenshot to see if anything has changed. If anything has, the test fails.

A small test suite could look something like the following.

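```python
import seltest
from seltest import waitfor
from selenium.webdriver.common.action_chains import ActionChains

BASE = 'http://localhost:5000'  # hypothetical: root URL of the local test server


class Examine(seltest.Base):
    window_size = [1280, 800]
    base_url = BASE + '/runs/1/examine'
    # Before each screenshot, wait until the query status element has class "good".
    wait_for = {'css_selector': '.query-status', 'classes': ['good']}

    @waitfor('tr:first-child td:nth-child(20)', text='0')
    def sorted(self, driver):
        """Examine page sorted by decreasing Normal Read Depth."""
        # find_element_by_* is the Selenium 2/3-era API (removed in Selenium 4.3).
        rd = driver.find_element_by_css_selector('[data-attribute="sample:RD"] a')
        rd.click()

    @waitfor('[data-attribute="info:DP"] .tooltip')
    def tooltip(self, driver):
        """Examine page showing a Normal Read Depth tooltip."""
        dp = driver.find_element_by_css_selector('[data-attribute="sample:RD"]')
        # Hover over the column header to make the tooltip appear.
        hover = ActionChains(driver).move_to_element(dp)
        hover.perform()
```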

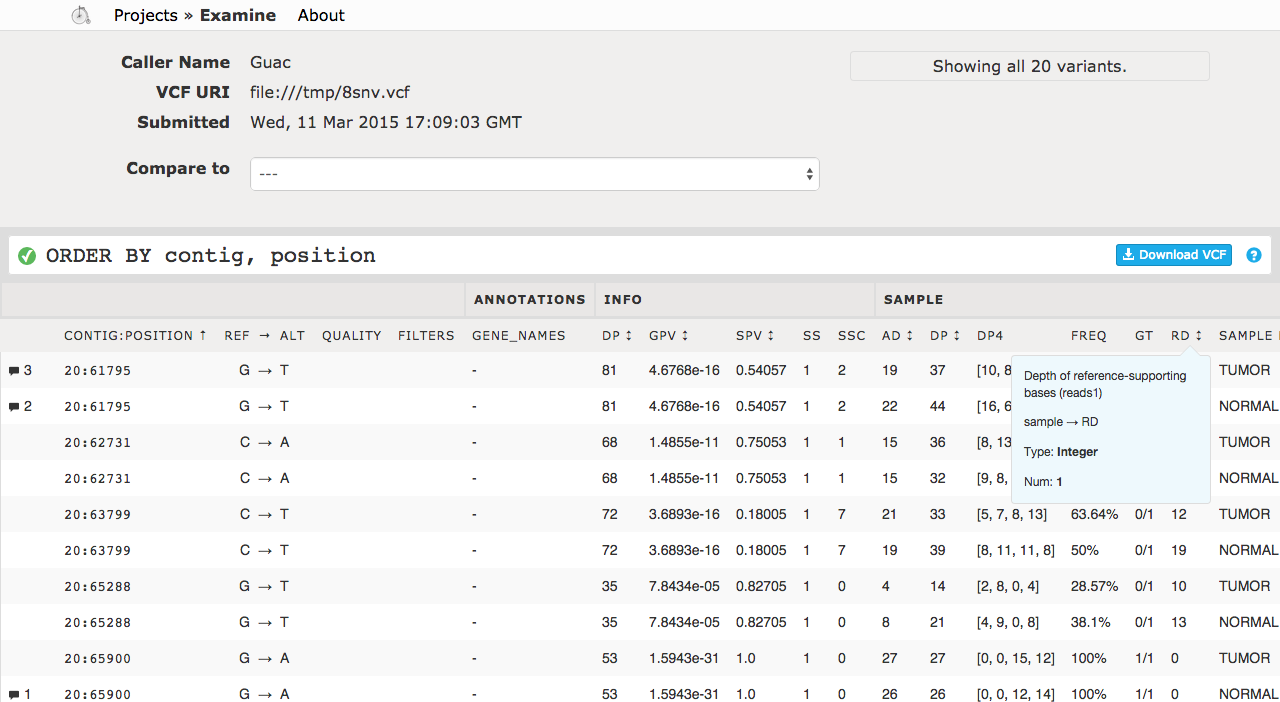

The third test, tooltip, would generate the following screenshot from Cycledash.

These tests are run locally with Seltest’s “update” command, which regenerates the golden master screenshots, and remotely on Travis CI with the “test” command, which verifies that the screenshots produced by a browser talking to the test server on a Travis worker match the golden masters we expect.
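The split between the two commands can be sketched as a tiny runner. This is a hypothetical illustration, not Seltest's actual code: `run_visual_tests` and its parameters are invented names, golden masters live in a plain dict instead of PNG files on disk, it skips the waiting that Seltest's `wait_for` handles, and it compares encoded PNG bytes rather than decoded pixels. Only `driver.get` and `driver.get_screenshot_as_png` are real Selenium WebDriver methods.

```python
from typing import Callable, Dict, MutableMapping


def run_visual_tests(
    tests: Dict[str, Callable],
    driver,
    base_url: str,
    golden: MutableMapping[str, bytes],
    update: bool,
) -> Dict[str, str]:
    """Hypothetical Seltest-style runner returning {test name: status}."""
    results = {}
    for name in sorted(tests):
        driver.get(base_url)                 # start every test from a fresh page load
        tests[name](driver)                  # perform the test's user interactions
        shot = driver.get_screenshot_as_png()
        if update:
            golden[name] = shot              # "update": the new shot becomes the golden master
            results[name] = 'updated'
        elif name not in golden:
            results[name] = 'missing'        # no golden master recorded yet
        else:
            # "test": any byte difference fails (stricter than a pixel diff,
            # since PNG encoding details could also differ).
            results[name] = 'pass' if golden[name] == shot else 'fail'
    return results
```

Because the driver is passed in, the same function works with a local browser, a remote SauceLabs browser, or a fake driver in a unit test.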

Cloud Chrome

One problem with these tests is that developers may run them on different operating systems or on different versions of the same browser, and even a point release of an OS can produce slightly different images: we’ve seen an off-by-one-pixel change when upgrading from OS X 10.9.2 to OS X 10.9.3. Running the tests in a browser such as Chrome also means keeping a specific old version installed and preventing it from auto-updating, since browser point releases can cause small visual changes too.

Using a headless browser like PhantomJS ameliorates some, but not all, of these problems. For example, the operating system upgrade noted above produced visual differences even in Phantom. More importantly, users aren’t actually using Phantom to navigate the web; they’re using Chrome or Firefox. Issues arise in Phantom that never would in Chrome or Firefox, and vice versa. Ideally, then, our tests will take place in a real browser.

One solution to this problem is to drive a remote browser. The operating system and browser version then stay constant, without any error-prone tweaking and maintenance of local environments. For this we use SauceLabs, a service that exposes, through an HTTP API, browsers that Selenium-based tests can drive. This lets us precisely control the operating system and browser version running our tests, for every developer and for our continuous integration server.
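A minimal sketch of pinning the environment with Selenium's Remote WebDriver follows. It assumes Selenium 4's options API, credentials in hypothetical `SAUCE_USERNAME` and `SAUCE_ACCESS_KEY` environment variables passed through SauceLabs' `sauce:options` capability, and the `ondemand.saucelabs.com` hub host (SauceLabs also has regional endpoints). The pinned version and platform strings are placeholders, not the versions Cycledash used.

```python
import os

# Assumed SauceLabs hub endpoint; check SauceLabs' docs for your region.
SAUCE_HUB = 'https://ondemand.saucelabs.com/wd/hub'

# Placeholder pinned environment: every developer and the CI server request
# exactly this browser, version and OS, so screenshots render identically.
PINNED = {
    'browserName': 'chrome',
    'browserVersion': '43.0',      # hypothetical pinned Chrome version
    'platformName': 'OS X 10.10',  # hypothetical pinned operating system
}


def sauce_capabilities(username, access_key):
    """Pinned capabilities plus SauceLabs credentials (copies PINNED)."""
    caps = dict(PINNED)
    caps['sauce:options'] = {'username': username, 'accessKey': access_key}
    return caps


def remote_driver():
    """Open the pinned remote Chrome on SauceLabs (needs selenium and credentials)."""
    # Imported lazily so the helpers above work without selenium installed.
    from selenium import webdriver

    caps = sauce_capabilities(os.environ['SAUCE_USERNAME'], os.environ['SAUCE_ACCESS_KEY'])
    options = webdriver.ChromeOptions()
    options.browser_version = caps['browserVersion']
    options.platform_name = caps['platformName']
    options.set_capability('sauce:options', caps['sauce:options'])
    return webdriver.Remote(command_executor=SAUCE_HUB, options=options)
```

Keeping the pinned values in one shared constant is the point of the design: changing the browser version becomes a single reviewed commit followed by a screenshot update, rather than drift across developer machines.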

How We Do It

Our workflow for Cycledash has two main components: manually updating screenshots, and automatically testing against them on our continuous integration server.

Once a change has been made to the application, the developer updates screenshots with a simple script. This script does three things:

- Starts up a test server instance

- Opens a tunnel to SauceLabs using SauceConnect

- Runs the Seltest update command, which executes the tests and updates the golden master screenshots.
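The three steps above can be sketched as a small Python script. Everything here is an assumption for illustration, not the script Cycledash uses: `SERVER_CMD`, the test directory and the ready-file path are hypothetical; the tunnel uses the Sauce Connect 4 `sc` binary with its `-u`/`-k` credential flags and `--readyfile` option; and `seltest update <dir>` is the assumed form of the update command.

```python
import os
import subprocess
import time

# Hypothetical command that starts the application's test server.
SERVER_CMD = ['python', 'run_test_server.py']


def tunnel_cmd(username, access_key, ready_file):
    """Sauce Connect 4 invocation; --readyfile is touched once the tunnel is up."""
    return ['sc', '-u', username, '-k', access_key, '--readyfile', ready_file]


def seltest_update_cmd(test_dir):
    """Assumed form of Seltest's update command."""
    return ['seltest', 'update', test_dir]


def wait_for_file(path, timeout=120.0, poll=0.5):
    """Poll until `path` exists; return False if `timeout` seconds pass first."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(path):
            return True
        time.sleep(poll)
    return False


def update_screenshots(test_dir='tests/visual', ready_file='/tmp/sauce_ready'):
    """Start the server and tunnel, run the update, then shut both down."""
    if os.path.exists(ready_file):
        os.remove(ready_file)  # a stale ready file would make us skip the wait
    server = subprocess.Popen(SERVER_CMD)
    tunnel = subprocess.Popen(tunnel_cmd(
        os.environ['SAUCE_USERNAME'], os.environ['SAUCE_ACCESS_KEY'], ready_file))
    try:
        if not wait_for_file(ready_file):
            raise RuntimeError('Sauce Connect tunnel did not come up in time')
        return subprocess.call(seltest_update_cmd(test_dir))
    finally:
        tunnel.terminate()
        server.terminate()
        tunnel.wait()
        server.wait()
```

The `finally` block shuts the tunnel and server down even if the tests fail. A real script would probably also wait for the test server to accept connections before running the tests.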

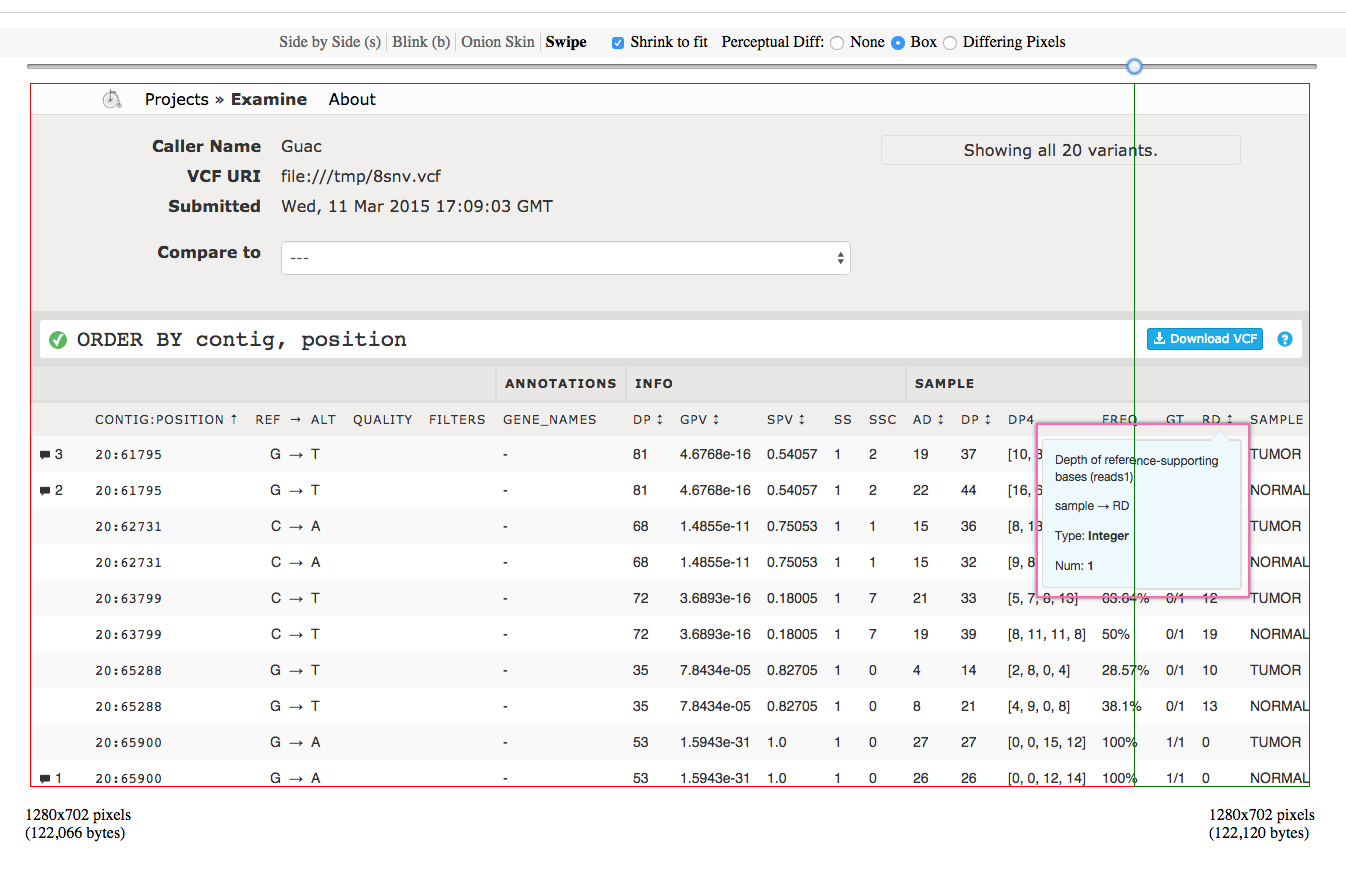

At this point, the new screenshots can be compared to the old ones using a visual diff tool like Webdiff (the lab’s favorite) to confirm that only what was expected to change actually changed. In the following screenshot, we can see that our change of the tooltip’s border from 1px to 2px has been successfully caught.

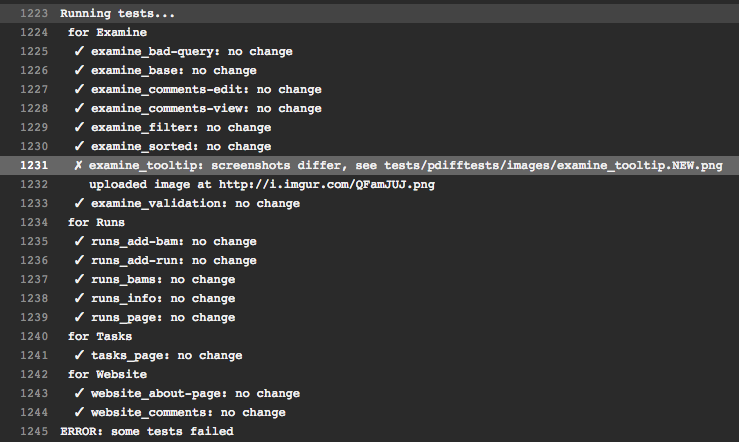

Assuming that this change was intended, the developer would commit the new golden master screenshot to git and push to GitHub. Travis CI would pull these changes, run its usual linting and unit tests, and then run the Seltest tests to ensure that the screenshots it generates match those the developer expects. If the screenshots hadn’t been updated in the above example, Travis would fail, with an error like the following in its logs.

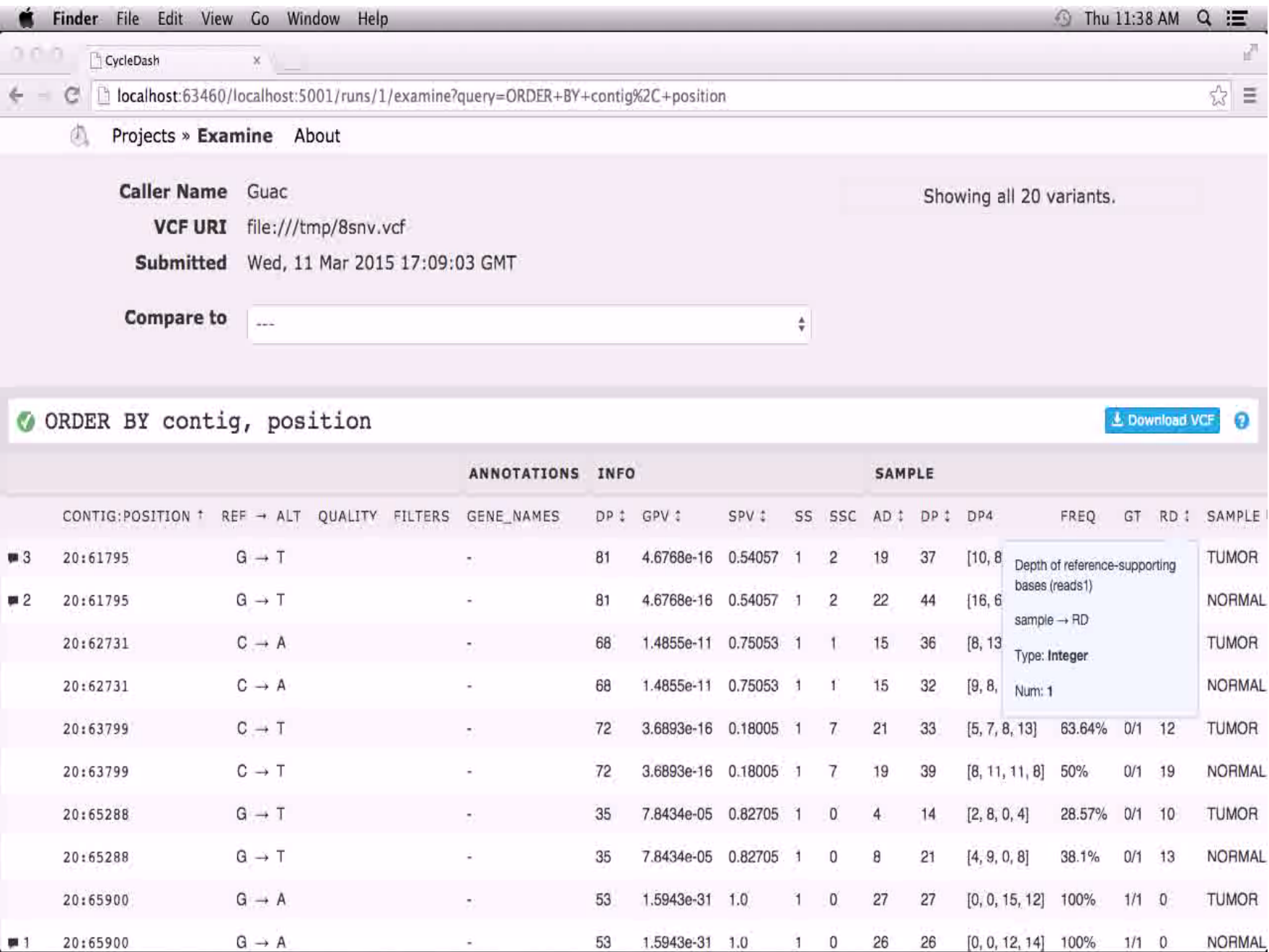

The Imgur screenshot is uploaded by Seltest for easy debugging. SauceLabs and Seltest also give the developer several other debugging options. One can watch the tests execute remotely on SauceLabs; a screenshot of such a live video follows. One can also interact with the remote browser directly, or run Seltest in interactive mode to drive the remote browser programmatically from an IPython REPL.

The Final Word

This setup allows us to be confident that our changes aren’t unexpectedly changing what someone interacting with our web application would see and experience, and it lets us do so in a completely automated, programmatic way. The same setup would also work for mobile web applications, for testing multiple browsers at once, with other headless or remote browser services, and with any kind of web server.

Visual testing allows you to quickly cover large portions of your codebase and catch visual errors without having to manually interact with your web application. Add in continuous integration and remote browsers, and you are automatically testing for visual regressions that you might not have been able to catch before.